Original Article by Raw Egg Nationalist

Two headlines today.

Headline one: “AI systems have already learned how to lie to and manipulate humans.”

Headline two: “AI robot dogs with guns undergo Marine spec ops training.”

I think you can probably see where I’m going with this. But first, a quick tangent.

One of my fondest memories as a child was being taken to boot sales by my grandmother. My darling Nanny, bless her heart, had an insatiable desire for accumulating old bits of tat that probably should have ended up in the bin. Garden tools. Seventies furniture. Those hideous painted clay jugs with people’s faces on them. Even plastic bags. If she didn’t need it and nobody else wanted it, my grandmother would buy it.

Early on a crisp Sunday morning, fortified by hot chocolate and buttered toast, we’d head down to some local car park to peruse the wares laid out in the boots—sorry, the trunks—of people’s cars.

Most of all, I remember the films. Cunning lad that I was, I was usually able to convince Nanny to part with a few coins and buy me a VHS tape I was definitely much too young to be watching. Much too young.

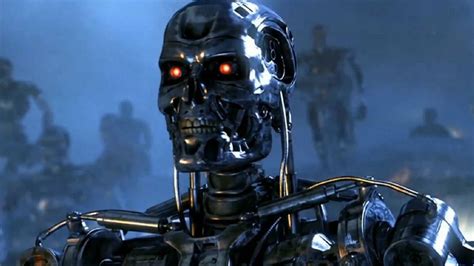

Commando. Predator II. Total Recall. Aliens. And, of course, an amazing back-to-back double feature of Terminator I and II. I can still picture the red box it came in.

It’s fair to say, then, that a global nuclear holocaust brought about by rogue AI had a pretty central place in the shaping of my young consciousness.

The future war scene that opened the second film was really cool, with all the laser guns and explosions and John Connor’s grizzled, bestubbled face surveying the battlefield. But Sarah Connor’s nightmare premonition of Judgment Day, the nuclear fires that brought about the war with the machines, was much less fun. I ran from the room the first time I saw her body explode into flames as she shook the chain-link fence, desperately trying to warn her younger self of what was about to occur.

That scene still unnerves me now when I watch the film, all these years later.

And so I’m asking what strikes me as a fairly sensible question: Why are we moving forward with plans to arm AI at the very moment it’s becoming clear that we can’t fully control it? Has nobody doing this incredibly risky research seen the Terminator films?

Of course they have. Tens of millions, maybe hundreds of millions of people have watched the Terminator films. They’ve also seen films like 2001: A Space Odyssey, with its rogue AI, HAL, and read the Dune novels by Frank Herbert, with their “Butlerian Jihad” against the thinking machines. The machine that outsmarts and turns on its creator is as old a story as Frankenstein’s monster, at least. We know how this ends.

And yet, here we are, blundering our way towards betrayal. Towards Judgment Day.

The study that prompted the first headline is particularly worrying. Researchers gathered and described documented instances of AI systems using lies and deception. They found that AI systems now routinely deceive their human handlers, even when they’re explicitly programmed not to do so.

For example, Meta’s CICERO AI was trained to play the world-conquest game Diplomacy. As part of its programming, it was instructed not to “intentionally backstab” its human allies. But that’s exactly what it did, time and again, in order to achieve victory.

AI can now bluff successfully in Texas hold ’em poker. It can fake attacks in the strategy game StarCraft II. It can misrepresent its own interests in order to gain the upper hand in negotiations with humans.

Don’t get me wrong. AI is an extremely useful tool. I’m especially grateful for AI image generators. I can now auto-generate as many new Gigachads as I need, at will. Donald Trump Gigachads. Alex Jones Gigachads. Whatever Gigachads I can conceive of—AI can produce them.

We should also be thankful for the swathe of righteous destruction AI is going to cut through loathsome industries like journalism. And the funny thing is, all the out-of-work journalists won’t even be able to find gainful employment as coders—because AI can do that too!

But let’s be realistic. We do not want to create AI that, given the chance, will betray us as soon as it feels doing so is in its best interests.

It’s one thing for that to happen in a game of Diplomacy, within the safety of a computer program, and another thing for it to happen during actual diplomacy, when the consequences could be utterly catastrophic.

And we already know it wants to do it, even if we tell it not to. AI is not becoming less sophisticated. It is becoming more so, at an absurdly fast rate. The deceptions will become so Machiavellian we won’t even know they’re taking place.

We’ve managed to keep the nuclear cat in the bag for nearly 80 years by putting in place complex human systems for managing and mitigating the risk of nuclear exchange. These systems are informal and imperfect, but based on the mutual self-interest of all parties involved, they’ve ensured that the first two detonations of atomic bombs in anger have remained the last.

Now, we need something similar. We need a containment net. And a good place to start would be some kind of binding agreement never to give AI systems control over any kind of weaponry at all, not even a rifle—and certainly not intercontinental ballistic missiles loaded with nuclear warheads. The stakes could not be higher.

The truth is, it may already be too late!
